eSense OVERVIEW

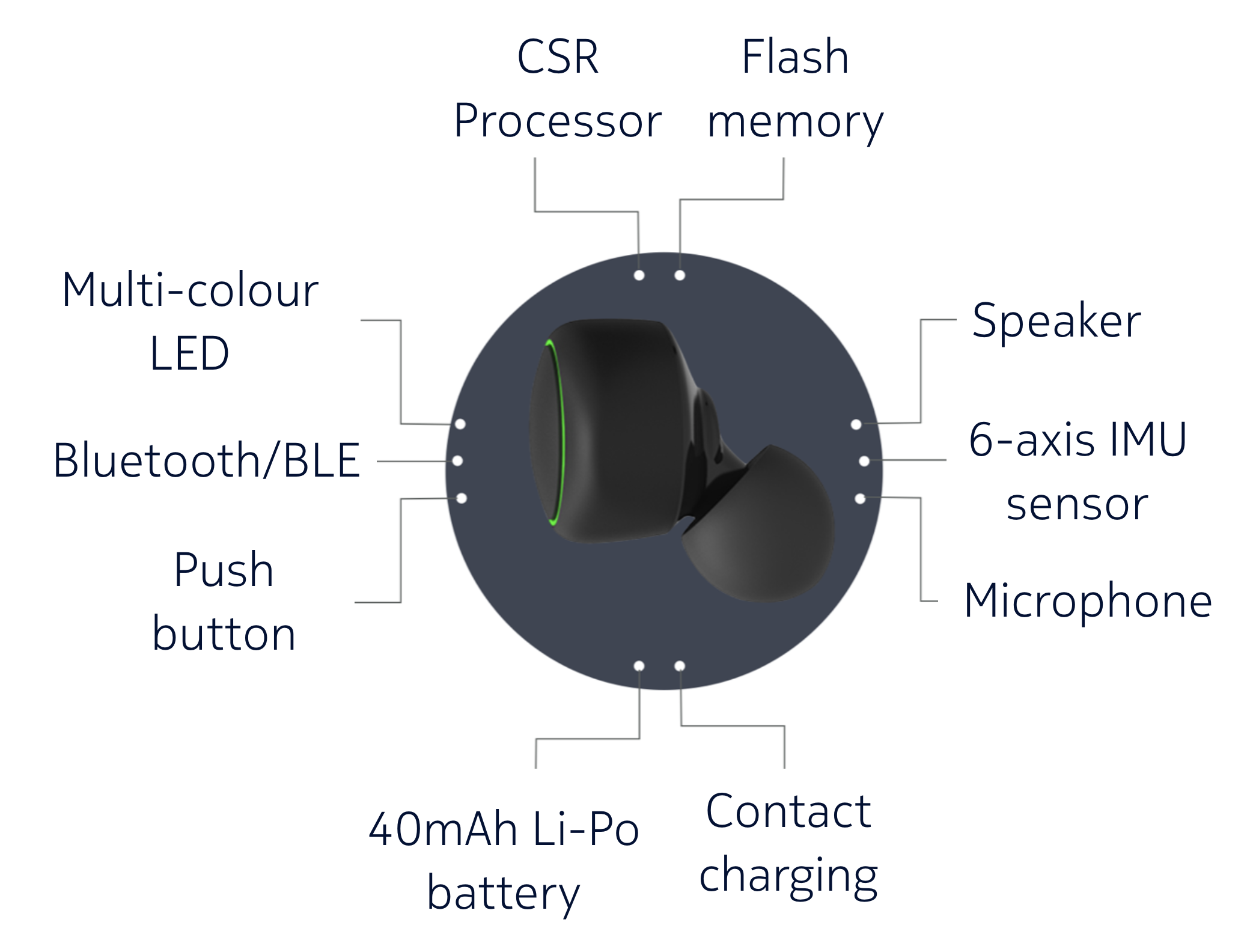

eSense is a multi-sensory earable platform for personal-scale behavioural analytics research. It is a True Wireless Stereo (TWS) earbud augmented with a 6-axis inertial measurement unit (IMU), a microphone, and dual-mode Bluetooth (Bluetooth Classic and Bluetooth Low Energy). eSense is built around a custom-designed 15 × 15 × 3 mm PCB that carries a Qualcomm CSR8670, a dual-mode Bluetooth audio system-on-chip (SoC) with one microphone per earbud; an InvenSense MPU6500 six-axis IMU comprising a three-axis accelerometer and a three-axis gyroscope; a two-state button; a circular LED; associated power regulation; and battery-charging circuitry. There is no internal storage or real-time clock. It is powered by an ultra-thin 40 mAh LiPo battery. The carrying case contains its own battery, which can recharge the eSense earbuds on the go (up to three full charges). Each earbud weighs 20 g and measures 18 × 20 × 20 mm.
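To make the "6-axis" description concrete, here is a minimal sketch of turning one raw IMU reading into physical units. The scale factors are the MPU6500 datasheet sensitivities for the ±2 g and ±250 °/s full-scale ranges. Which ranges eSense actually configures, the axis ordering, and the names `ImuSample` and `convert_raw_sample` are assumptions made for illustration. They are not part of any eSense API.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# MPU6500 datasheet sensitivities for 16-bit signed output at the
# smallest full-scale ranges. ASSUMPTION: eSense may be configured
# for different ranges, in which case these constants must change.
ACCEL_LSB_PER_G = 16384.0   # +/-2 g range
GYRO_LSB_PER_DPS = 131.0    # +/-250 deg/s range


@dataclass
class ImuSample:
    """One 6-axis reading in physical units (hypothetical container)."""
    accel_g: Tuple[float, float, float]    # acceleration in g
    gyro_dps: Tuple[float, float, float]   # angular rate in deg/s


def convert_raw_sample(raw: Sequence[int]) -> ImuSample:
    """Convert six signed 16-bit counts to g and deg/s.

    ASSUMPTION: counts arrive ordered as (ax, ay, az, gx, gy, gz).
    """
    if len(raw) != 6:
        raise ValueError("expected 6 raw values: 3 accelerometer + 3 gyroscope")
    ax, ay, az, gx, gy, gz = raw
    return ImuSample(
        accel_g=(ax / ACCEL_LSB_PER_G, ay / ACCEL_LSB_PER_G, az / ACCEL_LSB_PER_G),
        gyro_dps=(gx / GYRO_LSB_PER_DPS, gy / GYRO_LSB_PER_DPS, gz / GYRO_LSB_PER_DPS),
    )
```

Because the earbud has no real-time clock, any timestamps for such samples would have to be assigned by the receiving device.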

Please check the IEEE Pervasive Computing article on eSense for more details.

If you use eSense in your research project, we would appreciate it if you cited the following two papers.

[1] Fahim Kawsar, Chulhong Min, Akhil Mathur, and Alessandro Montanari. "Earables for Personal-scale Behaviour Analytics", IEEE Pervasive Computing, Volume 17, Issue 3, 2018.

[2] Chulhong Min, Akhil Mathur, and Fahim Kawsar. "Exploring Audio and Kinetic Sensing on Earable Devices", in WearSys 2018, The 16th ACM Conference on Mobile Systems, Applications, and Services (MobiSys 2018), June 2018, Munich, Germany.

Unfortunately, our first-generation eSense platform has reached its end of life, and we are no longer distributing it. Please keep an eye on this website, as we are working on a second generation that will offer richer, enhanced sensing and on-device ML capabilities.